Why You Should Be Doing A/B Testing For Marketing

You have just devised a new marketing campaign that you HOPE will boost attendance at the events you plan. You believe the campaign is bold and daring, but the truth is that you have no evidence it will work. The campaign is an experiment. Aren't they always?

If you choose, you can launch that campaign and hope for the best, hope that your gamble will pay off. But how much time and money will that gamble cost? Can you afford it?

Fortunately, you have another choice: one that can dramatically reduce the time and money you lose if your prospective event attendees are not receptive to your bold and daring marketing campaign, AND one that can dramatically improve your confidence in launching it, because you will have evidence that it is likely to boost attendance at future events as well as sales, revenues, and profits.

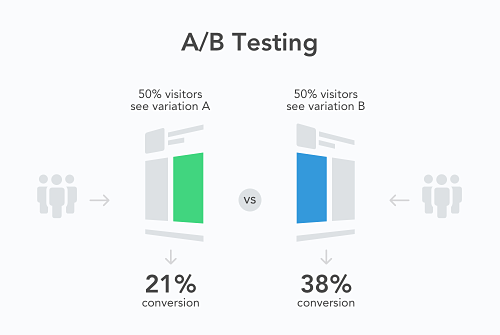

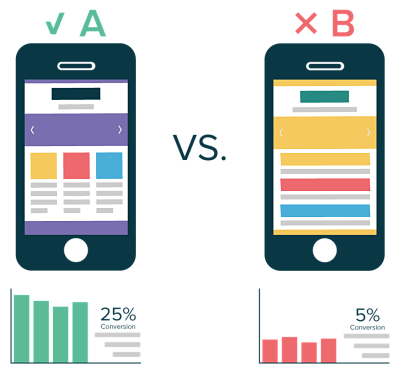

The second choice is A/B testing. Also known as split testing, A/B testing can help you decide whether to spend time and money on the first choice. It involves testing “two versions of something to figure out which performs better,” as Harvard Business Review summarizes the concept in the article “A Refresher on A/B Testing.”

The concept of A/B testing, comparing Version A to Version B, has been around for years, and in the marketing world it has become a very effective way of comparing campaigns and designs.

A/B testing is not limited to one aspect of your marketing campaigns. It can test numerous elements of a campaign, such as different slogans, headlines, text, images, calls to action (CTAs), or even different ways of registering for an event.

For example, you can send a marketing pitch with Headline X to one group of people and an otherwise identical pitch with Headline Y to a second group to test which headline is better. You can measure “better” in many ways, including which headline spurred more people to register for an event, click-through rates, bounce rates, impressions, etc. Then, you can send a marketing pitch with Photograph X to one group and the same pitch with Photograph Y to a second group. And on and on, letting you compare different variables until you find the right combination.
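The split into two groups works best when it is random, so that neither group is hand-picked. Here is a minimal Python sketch of one way to do that, assuming a hypothetical mailing list of email addresses. The function name `split_ab` and the fixed seed are illustrative choices, not features of any particular email tool.

```python
import random

def split_ab(recipients, seed=42):
    """Randomly split recipients into two groups of near-equal size."""
    shuffled = list(recipients)            # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # seeded shuffle makes the split repeatable
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

# Hypothetical mailing list of 10 addresses
recipients = [f"person{i}@example.com" for i in range(10)]
group_x, group_y = split_ab(recipients)  # group_x gets Headline X, group_y gets Headline Y
print(len(group_x), len(group_y))
```

Each person lands in exactly one group, so the only planned difference between the two mailings is the headline.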

“A/B testing is a fantastic method for figuring out the best online promotional and marketing strategies for your business,” wrote Neil Patel, the No. 2 ranked marketing influencer to watch in 2017, according to Forbes magazine. “It can be used to test everything from website copy to sales emails to search ads.”

13 Ideas For Your A/B Tests

The science behind A/B testing is like the science behind polling.

In polling, you can interview 10 million people in a nation of 300 million and still come up with results that aren’t close to the actual voting results. That’s because the 10 million people you reached might be completely different from the other 290 million people.

Pollsters are more accurate when they interview people similar to the population at large. Thus, they ask the people they interview about their age, income, education, political party, marital status, etc. They calculate what percentage of the people they interviewed fall into each age group, income bracket, etc., and compare the results to the population at large. Ideally, the people polled represent the population. If they don’t, pollsters will adjust their data, essentially predicting the results as if their respondents accurately represented the populace.
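To make that adjustment concrete, here is a small Python sketch of one simple form of it: weighting each group's answer by its share of the population instead of its share of the sample. All of the numbers and the two age groups are made up for illustration, and real pollsters use more elaborate methods.

```python
def raw_and_weighted(support, sample_share, population_share):
    """Return (unadjusted, population-weighted) overall support."""
    raw = sum(support[g] * sample_share[g] for g in support)
    weighted = sum(support[g] * population_share[g] for g in support)
    return raw, weighted

# Hypothetical poll: support for a candidate within each age group
support = {"under_40": 0.60, "40_and_over": 0.30}
# The sample over-represents younger respondents...
sample_share = {"under_40": 0.80, "40_and_over": 0.20}
# ...while the (assumed) population is split evenly.
population_share = {"under_40": 0.50, "40_and_over": 0.50}

raw, weighted = raw_and_weighted(support, sample_share, population_share)
print(round(raw, 2), round(weighted, 2))
```

In this made-up case the unadjusted figure overstates support because the sample leans toward the group that likes the candidate more.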

Similarly, event planners can’t conduct valid A/B testing by testing two groups of people who differ completely from each other. The Harvard Business Review article, for example, notes that “…maybe mobile users of your website tend to click less on anything, compared with desktop users” so you have to “level the playing field” by dividing the users by mobile and desktop.
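One way to picture “leveling the playing field” is to compare Version A and Version B separately within each device type. The Python sketch below does that for a handful of made-up visit records. The record layout and the function name `conversion_by_segment` are assumptions for illustration only.

```python
from collections import defaultdict

def conversion_by_segment(visits):
    """Return {(device, version): conversion rate} from visit records."""
    totals = defaultdict(int)
    conversions = defaultdict(int)
    for device, version, converted in visits:
        key = (device, version)
        totals[key] += 1
        conversions[key] += int(converted)
    return {key: conversions[key] / totals[key] for key in totals}

# Hypothetical records: (device, version shown, did the visitor register?)
visits = [
    ("mobile", "A", False), ("mobile", "A", True),
    ("mobile", "B", True), ("mobile", "B", True),
    ("desktop", "A", True), ("desktop", "A", False),
    ("desktop", "B", False), ("desktop", "B", False),
]
rates = conversion_by_segment(visits)
for key in sorted(rates):
    print(key, rates[key])
```

Comparing A against B inside each segment keeps a difference between mobile and desktop habits from being mistaken for a difference between the two versions.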

The bottom line is that you have to know who your prospective event attendees are, such as the people who browse your website.

There is also the question of statistical significance. In other words, you can't rely on an A/B test whose results have too large a margin of error or that produces “false positive results,” meaning results that show a marketing campaign, headline, or photograph will work when it won’t. How to determine whether a result is statistically significant is a complex topic, too complex to explain in this article, but the Harvard Business Review article can help you.
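Without going into the theory, here is a rough Python sketch of one common check, a two-proportion z-test, applied to made-up registration counts. It assumes both groups are large enough for the normal approximation to hold, and the function name is our own. The result is a p-value, which many practitioners compare against a conventional threshold such as 0.05. That threshold is a convention, not a rule from the Harvard Business Review article.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that A and B perform the same
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical results: 100 of 1,000 registered with Headline X,
# 130 of 1,000 registered with Headline Y.
z, p = two_proportion_z_test(100, 1000, 130, 1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value suggests the gap between the two headlines is unlikely to be chance alone. A large one means the test has not yet shown a real difference.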

What should you test via A/B testing? Here are 13 ideas for elements that could be making an impact on your marketing, gathered by our marketing team from their own experience.

* Headlines and blog titles

* Size and typefaces of fonts in headlines, copy, etc.

* Subject lines in emails

* Graphics and photographs

* Text of website copy, blogs, and emails

* Text of marketing and sales letters

* Layout of a website, email newsletter, etc.

* Text of calls to action (CTAs)

* Placement of buttons

* Colors of buttons, text, etc.

* Price of event

* Price of products and services sold at event

* Descriptions of events, speakers at events, topics, etc.

Metrics For Testing

We’re by no means suggesting that you test all of the above items via A/B testing. That would be excessively time-consuming. YOU know better than we do the strengths and weaknesses of your marketing campaign and what elements you should consider changing. Be selective. “Make A and B significantly different,” recommends the BrightEdge article. “A/B testing can validate a decision to make a change or to keep things as they are, but only if the proposed update is noticeably different from the original (though still within site style).”

I also recommend testing changes only when they are needed and running tests for at least one week. BrightEdge further advises that you “test changes to optimize your landing page headline and CTAs, adjusting the language to determine the best messaging” because “a headline change could net a 100 percent or better increase in conversions.”

There are many metrics you can use to measure the effectiveness of the changes you are testing via A/B testing. They include click-through rates, website views, email responses, email open rates, conversions, event registrations, inquiries sent to your company, and more.

There are also “hundreds of metrics” that can be measured by software, according to the Harvard Business Review article “A Refresher on A/B Testing,” but measuring too many metrics is one of the three major mistakes that people conducting A/B tests make. The other two are making decisions before the tests have run long enough and not doing enough retesting.